Frontend API with Nuxt + Nitro = Flexibility 🐙

The question is always where to fix a problem. For example, if data is missing from an API response, you can either send a second request or change the response of the first one. In a headless setup you want to fetch only the minimum amount of data you need, but requirements change, and sooner or later you need more data or the same data in a different structure. You then find yourself either asking backend developers to optimize the API or moving logic into the frontend that has nothing to do with the frontend. In this blog post, let’s look at how we can add a layer between frontend and API that transforms and optimizes the data according to our needs. On top of that, we can very easily add caching (if needed) to make it blazing fast.

Setup

We are using the Demo-Store from Shopware Frontends.

Basically, the following examples rely on the Nuxt server directory, Nitro's Storage Layer, and Nitro's Cache API.

The good thing is that with Shopware Frontends and the Demo-Store template you do not need to add any additional packages. Everything is already there; you just need to use what you already have.

Nuxt - Server Directory

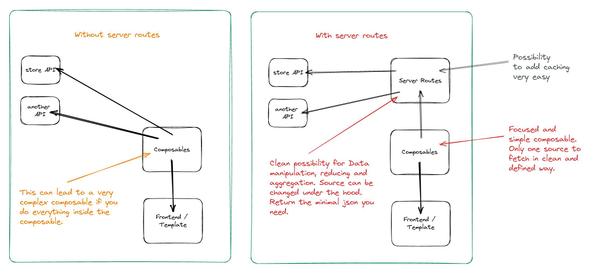

In Nuxt’s server directory, you can register your own API routes. Instead of calling external APIs directly, the application always calls its own API routes. What happens inside these server routes doesn’t really matter to the frontend. What matters is that the structure of the response stays stable once you rely on it; otherwise you’ll have to update the frontend templates and logic as well. In return, you are free to call several different APIs in one server route and build a minimal JSON response that your frontend can consume. Keep in mind that the following examples all live in the Nuxt server directory.
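As a minimal sketch of that idea, the function below merges two upstream responses into one small object for the frontend. The types `UpstreamCategory`, `UpstreamProduct`, and `CategoryTeaser` and the function `toCategoryTeaser` are hypothetical and not part of Shopware or Nuxt; in a real server route, the two inputs would come from your own API calls.

```typescript
// Hypothetical upstream shapes; real API responses carry many more fields.
interface UpstreamCategory {
  id: string;
  name: string;
  description: string | null;
  customFields: Record<string, unknown>;
}

interface UpstreamProduct {
  id: string;
  name: string;
  calculatedPrice: { unitPrice: number };
  categoryIds: string[];
}

// The minimal shape the frontend needs (an assumption made for this sketch).
interface CategoryTeaser {
  id: string;
  name: string;
  productCount: number;
  cheapestPrice: number | null;
}

// Merge two upstream responses into one small object.
function toCategoryTeaser(
  category: UpstreamCategory,
  products: UpstreamProduct[],
): CategoryTeaser {
  // keep only the products that belong to this category
  const own = products.filter((p) => p.categoryIds.includes(category.id));
  const prices = own.map((p) => p.calculatedPrice.unitPrice);
  return {
    id: category.id,
    name: category.name,
    productCount: own.length,
    cheapestPrice: prices.length > 0 ? Math.min(...prices) : null,
  };
}
```

The frontend then only depends on the `CategoryTeaser` shape, so the upstream APIs can change as long as the server route keeps producing that object.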

Nitro - Storage Layer

If you come from the PHP world, you may know Flysystem, a very popular filesystem abstraction layer 🙂 Thanks to Frank de Jonge for this. The Nitro Storage Layer follows the same approach and can be used with different drivers, for example Redis, Azure, memory, filesystem, databases, and many more. Internally, the Storage Layer uses an instance of the unstorage package, so check its documentation if you want to use a specific driver.

How to configure Nitro's Storage Layer?

Here is an example of how to configure a storage layer with the name “cache” in Nuxt. You can define multiple storage layers, and you can use different configs for the production and development environments.

// nuxt.config.ts
export default defineNuxtConfig({
  nitro: {
    // storage mounted under the name "cache" in production
    storage: {
      cache: {
        driver: "planetscale",
        url: process.env.PLANETSCALE_URL,
        table: "storage",
      },
    },
    // overrides that apply only in development
    devStorage: {
      cache: {
        driver: "redis",
        url: "redis://localhost:6379",
      },
    },
  },
});

How to get an Item from Nitro's Storage Layer?

This example is taken from a server route that expects a POST request with a slug in the body/payload. We take the slug, generate our cache key, and look that key up in the storage. If we find an item, we return it as type SeoUrl.

const body = await readBody(event);
const slug = body?.slug;

// build the cache key for this slug
const key = 'cache:nitro:functions:seoUrl:' + slug + '.json';

const storage = useStorage();
const item = (await storage.getItem(key)) as Item;

// cache hit: return the stored SeoUrl
if (item) {
  return item.value.elements[0] as SeoUrl;
}

What you do not see here is that if we do not find an item in the cache, we call the API endpoint to get the data (SeoUrl) we need. So you can put Nitro's storage layer in front of any API call to create a cache if needed. This is also possible outside of server routes; there you just need to import unstorage and create the storage, or use it if it already exists.
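The "check the cache first, otherwise call the API and store the result" flow can be sketched independently of Nitro. The `KeyValueStore` interface, `createMemoryStore`, and `getOrLoad` below are hypothetical names for this sketch; they only imitate the two storage calls used here (`getItem` and `setItem`) with an in-memory map and are not the unstorage implementation.

```typescript
// Minimal stand-in for a key-value storage; a real storage layer offers more.
interface KeyValueStore {
  getItem(key: string): Promise<unknown>;
  setItem(key: string, value: unknown): Promise<void>;
}

// In-memory store, used only so this sketch can run on its own.
function createMemoryStore(): KeyValueStore {
  const data = new Map<string, unknown>();
  return {
    async getItem(key) {
      const value = data.get(key);
      return value === undefined ? null : value;
    },
    async setItem(key, value) {
      data.set(key, value);
    },
  };
}

// Return the cached value if present; otherwise load it and store it.
async function getOrLoad<T>(
  store: KeyValueStore,
  key: string,
  load: () => Promise<T>,
): Promise<T> {
  const cached = await store.getItem(key);
  if (cached !== null) {
    return cached as T; // cache hit, the loader is not called
  }
  const fresh = await load(); // cache miss, e.g. the real API call
  await store.setItem(key, fresh);
  return fresh;
}
```

In a server route, `load` would be the function that calls the API endpoint, and the key would be built from the slug as in the snippet above.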

Nitro - Cache API

So why should I use server routes instead of directly calling the API I need?

Let’s have a look at the image of this blog post.

If you have dedicated composables that each do one thing in a simple way, then not using server routes is fine, I would say. I just want to mention that you can move data fetching and data transformation out of your composables and into server routes. This also lets you add Nitro’s cache API there (if needed). It can give you cleaner composables and, in the end, fewer of them.

What to use Nitro’s Cache API for?

- Creating API Endpoints in your Nuxt Application

- Is built on top of the Storage Layer

- Provides cachedEventHandler and cachedFunction

- Route Rules (cache complete routes via config; experimental)

Code Example

In this example, we use cachedFunction in a file inside the utils folder of the server directory. You could also use cachedEventHandler inside your custom server route, but if you want to reuse the same function, it is better to keep it in a separate utils file that you can import in multiple places.

The most important thing is that we wrap the function that makes the API call in cachedFunction. We can also pass options like maxAge, name, and getKey as an object. Check the documentation for more options, such as the shouldBypassCache and shouldInvalidateCache functions.
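To make the options more tangible, here is a deliberately simplified stand-in for what a cached function wrapper does with `maxAge` and `getKey`. It is not Nitro's implementation: `memoizeWithTtl`, `TtlOptions`, and the injectable `now` clock are assumptions made for this sketch, and it only caches in memory.

```typescript
interface TtlOptions<A> {
  maxAge: number; // lifetime of an entry in seconds
  getKey: (arg: A) => string; // builds the cache key from the argument
  now?: () => number; // clock in milliseconds, injectable for tests
}

function memoizeWithTtl<A, R>(
  fn: (arg: A) => Promise<R>,
  options: TtlOptions<A>,
): (arg: A) => Promise<R> {
  const now = options.now ?? (() => Date.now());
  const entries = new Map<string, { value: R; expiresAt: number }>();

  return async (arg: A): Promise<R> => {
    const key = options.getKey(arg);
    const hit = entries.get(key);
    if (hit && hit.expiresAt > now()) {
      return hit.value; // still fresh, skip the wrapped function
    }
    // missing or expired: call the wrapped function and remember the result
    const value = await fn(arg);
    entries.set(key, { value, expiresAt: now() + options.maxAge * 1000 });
    return value;
  };
}
```

With a key derived from the argument, calls with different arguments are cached separately, and each entry is refreshed once its `maxAge` has passed.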

// templates/vue-demo-store/server/utils/navigation.ts
export const getCachedMainNavigationResponse = cachedFunction(
  async function loadNavigationElements({ depth }: { depth: number }) {
    const runtimeConfig = useRuntimeConfig();
    try {
      const navigationResponse = await $fetch(
        runtimeConfig.public.shopware.shopwareEndpoint +
          '/store-api/navigation/main-navigation/main-navigation',
        {
          method: 'POST',
          body: {
            depth: depth,
          },
          headers: {
            'Content-Type': 'application/json',
            'sw-access-key': runtimeConfig.public.shopware.shopwareAccessToken,
            'sw-include-seo-urls': 'true',
          },
        },
      );
      return (navigationResponse as UseNavigationReturn) || [];
    } catch (e) {
      console.error('[useNavigation][loadNavigationElements]', e);
      return [];
    }
  },
  {
    maxAge: 60 * 60, // we cache it for 1 hour
    name: 'mainNavigation',
    // one cache entry per requested depth
    getKey: ({ depth }: { depth: number }) => String(depth),
  },
);

After creating getCachedMainNavigationResponse, we want to use it in a server route. For this, we create the get-main-navigation.get.ts file shown below. The .get in the file name tells Nuxt to accept only GET requests for this route/file.

// templates/vue-demo-store/server/routes/store-api/get-main-navigation.get.ts
// @url http://localhost:3000/store-api/get-main-navigation (GET)
import { getCachedMainNavigationResponse } from '../../utils/navigation';

export default eventHandler(async (event) => {
  const query = getQuery(event);
  // query values arrive as strings; fall back to a depth of 2
  const depth = query.depth ? parseInt(query.depth as string, 10) : 2;
  // pass an object, matching the { depth } parameter of the cached function
  const response = await getCachedMainNavigationResponse({ depth });
  return response;
});

The logic we moved to the navigation.ts file was part of a composable, see packages/composables/src/useNavigation.ts. Instead of using the original composable, we created a new one (see below) that we can use in our template (useCachedMainNavigation instead of loadNavigationElements).

// templates/vue-demo-store/components/server/useCachedMainNavigation.ts
import axios from 'axios';

export async function useCachedMainNavigation(depth: number) {
  // call our own server route instead of the store API
  const storeApiUrl =
    getOrigin() + '/store-api/get-main-navigation?depth=' + depth;
  const response = await axios.get(storeApiUrl);
  return response.data;
}

Yes, this looks like a lot of work, and it is not that easy when you already have a defined structure. The main problems I had were related to TypeScript, because I needed to return the same types as before. There were also some problems with calling our own server routes depending on the DEV or PROD environment (I fixed this with a custom getOrigin function, see below).

// @ToDo: This is a hacky way. Maybe just using a runtimeConfig for that.

function getOrigin(): string | undefined {

let origin = '';

const nuxtApp = useNuxtApp();

const isDeV = process.env.NODE_ENV !== 'production';

if (process.server) {

if (nuxtApp.ssrContext?.event.node.req.headers.host !== 'undefined') {

const location = isDeV ? 'http://' : 'https://'; // in dev mode we need to use http

const host = nuxtApp.ssrContext?.event.node.req.headers.host;

origin = location + host;

}

} else {

origin = window.location.origin;

}

return origin;

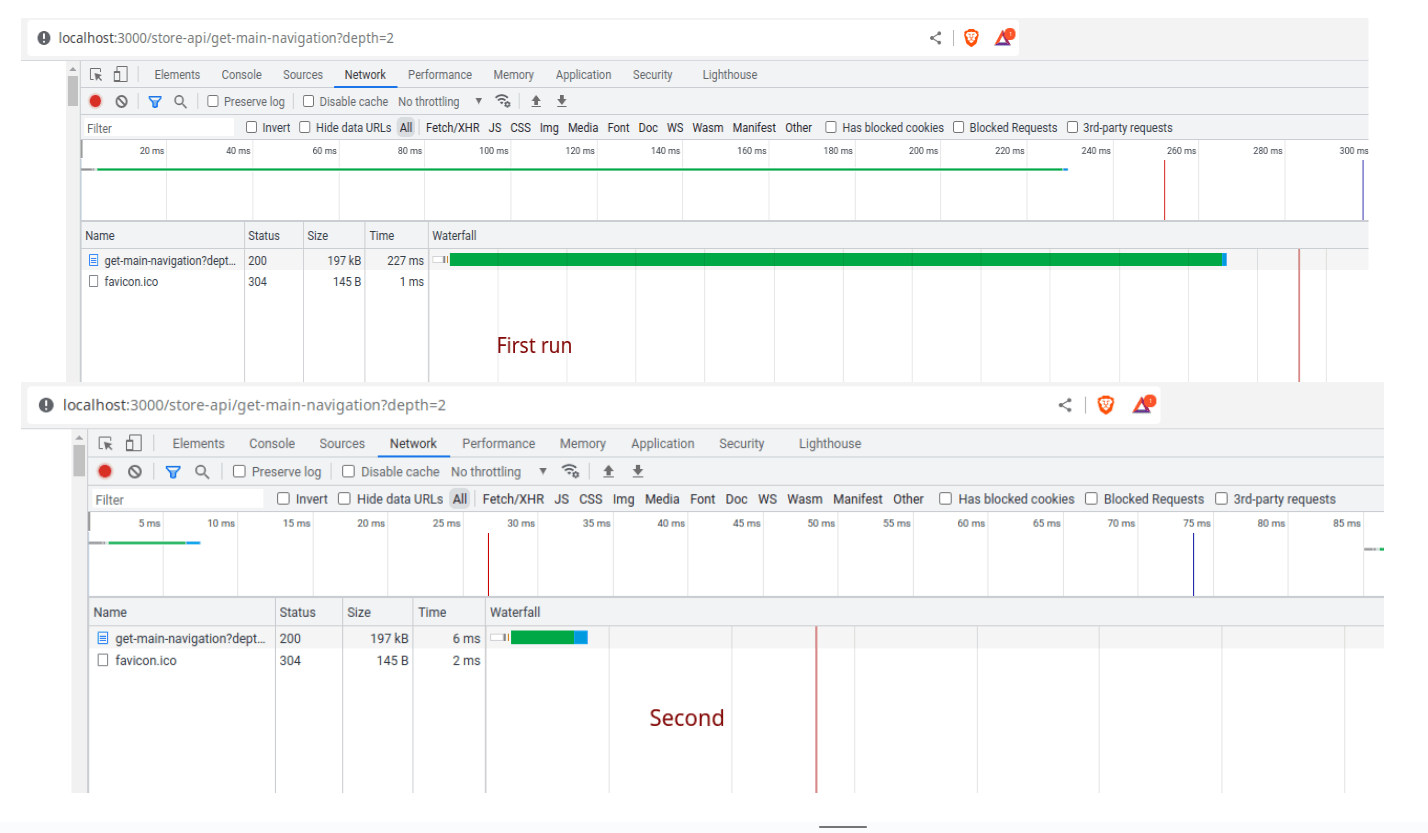

}**Now let’s look at the result.**🚀

The first run is without cache (see mostly same time like store API response time) and the second run is the cached result coming out of memory in DEV environment.
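Coming back to the getOrigin helper: its protocol and host handling can also be pulled into a pure function, which makes the DEV/PROD behaviour easy to check without a running Nuxt app. This is only a sketch; `buildOrigin` and its parameters are hypothetical names, and it assumes the same rule as above (http in development, https otherwise).

```typescript
// Build an origin such as "http://localhost:3000" from a Host header value.
// Returns an empty string when no host is known, like getOrigin above.
function buildOrigin(host: string | undefined, isDev: boolean): string {
  if (!host) {
    return '';
  }
  const protocol = isDev ? 'http://' : 'https://';
  return protocol + host;
}
```

On the server, the `host` argument would be the request's Host header and `isDev` the result of the NODE_ENV check.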

How to clear the cache?

For testing, it is sometimes useful to clear the cache 😉 Here is an example of a server route to which you can pass a seoURL as a parameter; every cache entry whose key contains that seoURL is removed. If you want to see the full list of cache keys, just add some console logs inside the loop.

// templates/vue-demo-store/server/routes/cache/seo-url-clear-cache.get.ts
// use this route to clear cache for a specific seo url or all seo urls
// @url http://localhost:3000/cache/seo-url-clear-cache?cacheKeyName=seoURL (GET)
export default eventHandler(async (event) => {
  // @todo: protect access to this route (?)
  const query = getQuery(event);
  // an empty cacheKeyName matches every key and clears all entries
  const cacheKeyName = (query.cacheKeyName as string) || '';
  const storage = useStorage();
  const cacheKeys = await storage.getKeys('cache/nitro');
  const deletedKeys: string[] = [];
  for (const key of cacheKeys) {
    if (key.includes(cacheKeyName)) {
      await storage.removeItem(key);
      deletedKeys.push(key);
    }
  }
  return { clearedItems: deletedKeys.length };
});

How to warm up cache?

Sure, you can wait for the first users to warm up your cache, but you could also set up a cron job or call a URL after the build has finished to warm up the cache for your users. Here is an example server route that warms up all SEO URLs and moves the results into the cache (see getCachedSeoUrlResponse). We use pagination and always fetch the SEO URLs in batches of 100.
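Before the route itself, the paging arithmetic it relies on can be sketched in isolation. The helpers `countPages` and `listPages` are hypothetical names for this sketch and do not appear in the Demo-Store code.

```typescript
// Number of pages needed to cover `total` items with `limit` items per page.
function countPages(total: number, limit: number): number {
  if (total <= 0 || limit <= 0) {
    return 0;
  }
  return Math.ceil(total / limit);
}

// List the 1-based page numbers a warm-up run has to request.
function listPages(total: number, limit: number): number[] {
  return Array.from({ length: countPages(total, limit) }, (_, i) => i + 1);
}
```

With 250 SEO URLs and a limit of 100, `listPages(250, 100)` yields pages 1, 2, and 3, where the last page holds the remaining 50 entries.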

// templates/vue-demo-store/server/routes/cache/seo-url-warm-up-cache.get.ts
// @url http://localhost:3000/cache/seo-url-warm-up-cache (GET)
export default eventHandler(async (event) => {
  const query = getQuery(event);
  // query values arrive as strings; fall back to batches of 100
  const limit = Number(query.limit) || 100;
  let response = await getSeoUrlPaginationResponse(1, limit);
  if (typeof response === 'undefined' || response.total === 0) {
    return response;
  }
  const maxPages = Math.ceil(response.total / response.limit);
  let elementsCount = 0;
  const start = performance.now();
  for (let currentPage = 1; currentPage <= maxPages; currentPage++) {
    // page 1 was already loaded above
    if (currentPage > 1) {
      response = await getSeoUrlPaginationResponse(currentPage, limit);
    }
    for (let i = 0; i < response.limit; i++) {
      const element = response.elements[i];
      if (typeof element !== 'undefined') {
        elementsCount++;
        const slug = '/' + element.seoPathInfo;
        // calling the cached function stores the result in the cache
        await getCachedSeoUrlResponse(slug);
      }
    }
  }
  const end = performance.now();
  const performanceTime = `Execution time: ${((end - start) / 1000).toPrecision(3)} seconds.`;
  return { performanceTime, elementsCount };
});

TL;DR - Learnings

- This experiment helped me a lot to understand server routes, Nuxt, and Nitro. Please treat the code examples as a beta release, and feel free to comment with improvements or ask questions.

- Caching does not help with slow 3G connections. 😵

- On slow 3G, both bandwidth and latency are worse, so if you do not cut down the file size, even a faster response is still slow.

- What you need to fix slow 3G is adaptive loading.

- You can also use the Storage Layer without API endpoints.

- You can easily create your own API Endpoints with Nuxt.

- Use cached API endpoints to transform objects and reduce the JSON file size.

- Combine multiple requests into one new, minified object.

- On Vercel with a Hobby account, serverless functions only run for 10 seconds, which is useless for cache warm-up.

- PlanetScale can be used as cache storage; see also the other supported drivers.